From Covert To Overt: UK Govt & Businesses Unleash Facial Recognition Technologies Across Urban Landscape

SUNDAY, AUG 06, 2023 - 08:10 AM

Authored by Nick Corbishley via NakedCapitalism.com,

The Home Office is encouraging police forces across the country to make use of live facial recognition technologies for routine law enforcement. Retailers are also embracing the technology to monitor their customers.

It increasingly seems that the UK decoupled from the European Union, its rules and regulations, only for its government to take the country in a progressively more authoritarian direction. This is, of course, a generalised trend among ostensibly “liberal democracies” just about everywhere, including EU Member States, as they increasingly adopt the trappings and tactics of more authoritarian regimes, such as restricting free speech, cancelling people and weakening the rule of law. But the UK is most definitely at the leading edge of this trend. A case in point is the Home Office’s naked enthusiasm for biometric surveillance and control technologies.

This week, for example, The Guardian revealed that the Minister for Policing Chris Philp and other senior figures of the Home Office had held a closed-door meeting in March with Simon Gordon, the founder of Facewatch, a leading facial recognition retail security company. The main outcome of the meeting was that the government would lobby the Information Commissioner’s Office (ICO) on the benefits of using live facial recognition (LFR) technologies in retail settings. LFR involves hooking up facial recognition cameras to databases containing photos of people. Images from the cameras can then be screened against those photos to see if they match.
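For readers who want a concrete picture of that screening step, here is a minimal sketch in Python. It is not Facewatch's or any police force's actual method: it assumes each face has already been reduced to a short list of numbers (an "embedding"), and the names `screen_face`, `watchlist` and the 0.9 threshold are all hypothetical, chosen purely for illustration.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two equal-length vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def screen_face(probe, watchlist, threshold=0.9):
    """Return (name, score) for the best watchlist match at or above
    the threshold, or None if nobody on the list is similar enough.

    The threshold is an illustrative assumption: lowering it produces
    more alerts, including more false ones.
    """
    best = None
    for name, reference in watchlist.items():
        score = cosine_similarity(probe, reference)
        if score >= threshold and (best is None or score > best[1]):
            best = (name, score)
    return best


# Toy watchlist with made-up three-number "embeddings" (hypothetical data).
watchlist = {
    "subject_a": [0.9, 0.1, 0.3],
    "subject_b": [0.1, 0.8, 0.5],
}

print(screen_face([0.88, 0.12, 0.31], watchlist))  # close to subject_a
print(screen_face([0.0, 0.0, 1.0], watchlist))     # resembles nobody listed
```

The point of the sketch is that a "match" is never a certainty, only a similarity score that clears a cut-off somebody chose.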

The lobbying effort was apparently successful. Just weeks after the government reached out to it, the ICO sent a letter to Facewatch affirming that the company “has a legitimate purpose for using people’s information for the detection and prevention of crime” and that its services broadly comply with UK Data Protection laws, which the Sunak government and UK intelligence agencies are trying to gut. As the Guardian report notes, “the UK’s data protection and information bill proposes to abolish the role of the government-appointed surveillance camera commissioner along with the requirement for a surveillance camera code of practice.”

The ICO’s approval gives legal cover to a practice that is already well established. Facewatch has been scanning the faces of British shoppers in thousands of retail stores across the UK for years. The cameras scan faces as people enter a store and screen them against a database of known offenders, alerting shop assistants if a “subject of interest” has entered. Shops using the technologies have placed notices in their windows (such as the one below) informing customers that facial recognition technologies are in operation, “to protect” the shop’s “employees, customers and stock.” But it is far from clear how many shoppers actually take notice of the notices.

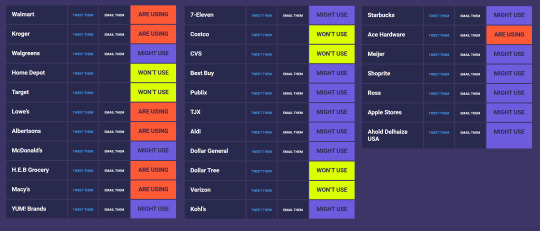

As examples of government outsourcing go, this is an extreme one. According to the Guardian, it is happening because of a recent explosion in shoplifting, which in turn is due to the widespread immiseration caused by the so-called “cost of living crisis” (the modern British way of saying “runaway inflation”). As NC readers know, runaway inflation is partly the result of corporate profiteering. So far, 400 British retailers, including some very large retail chains (Sports Direct, Spar, the Co-op), have installed Facewatch’s cameras. As the Guardian puts it, the government is “effectively sanctioning a private business to do the job that police once routinely did.”

From Covert to Overt

It is not just retailers that are making ample use of LFR technologies; so, too, are the British police. As I reported in my book Scanned, law enforcement agencies in the UK (specifically London’s Metropolitan Police Service and South Wales Police) and in the US have been trialling LFR in public places for a number of years. LFR has been used in England and Wales at a number of events, including protests, concerts and the Notting Hill Carnival, and also on busy thoroughfares such as Oxford Street in London.

In 2019, Naked Capitalism cross-posted a piece by Open Democracy on how the new, privately owned Kings Cross complex in London had used facial recognition cameras to identify pedestrians crossing Granary Square. Argent, the developer and asset manager charged with the design and delivery of the site, then ran the data through a database supplied by the Metropolitan Police Service to check for matches. Kings Cross was just one of many parts of London where unsuspecting pedestrians were having their biometric data captured by facial recognition cameras and stored on databases.

The UK is already one of the most surveilled nations on the planet. By 2019, it was home to more than 6 million surveillance cameras – more per citizen than any other country in the world, except China, according to Silkie Carlo, director of Big Brother Watch.

Until now, the police’s use of LFR has been pretty much covert, and each time information about that use has leaked out, there has been a public outcry; now, it is becoming overt. Policing Minister Chris Philp is encouraging police forces across the country to make use of LFR for routine law enforcement, according to an article by BBC Science Focus (which, interestingly, was removed from the web, but not before being preserved for posterity on the Wayback Machine):

Since police officers already wear body cameras, it would be possible to send the images they record directly to live facial recognition (LFR) systems. This would mean everyone they encounter could be instantly checked to see if they match the data of someone on a watchlist – a database of offenders wanted by the police and courts.

The Home Office’s recommendations for much broader use of LFR contradict the findings of a recent study by the Minderoo Centre for Technology and Democracy, at the University of Cambridge, which concluded that LFR should be banned from use in streets, airports and any public spaces – the very places where police believe it would be most valuable.

Unsurprisingly, consumer groups and privacy advocates are up in arms. The civil liberties and privacy campaigning organisation Big Brother Watch has organised an online petition to call on Home Secretary Suella Braverman and Metropolitan Police Commissioner Mark Rowley to stop the Met from using LFR. As of writing, the petition is on the verge of reaching its target number (45,000 signatures).

“Live facial recognition is a dystopian mass surveillance tool that turns innocent members of the public into walking ID cards,” says Mark Johnson, advocacy manager at Big Brother Watch:

Across seven months, thirteen deployments, hundreds of officer hours, and over half a million faces scanned in 2023, police have made just three arrests from their use of this intrusive and expensive mass surveillance tool… Rather than promote its use, the Government should follow other liberal democracies around the world that are legislating to ban this Orwellian technology from public spaces.

Those liberal democracies include the Member States of the EU, whose Parliament, to its credit, recently decided to ban the use of invasive mass surveillance technologies in public areas in its Artificial Intelligence Act (AI Act). However, that ban does not extend to EU borders, where police and border authorities plan to use highly invasive biometric identification technologies, such as handheld fingerprint or iris scanners, to register travellers from third countries and screen them against a multitude of national and international databases.

Reasons for Concern

UK citizens have plenty of reasons to be concerned about the proliferation of facial recognition cameras and other biometric surveillance and control systems. They represent an extreme infringement on privacy, personal freedoms and basic legal rights, including arguably the presumption of innocence. In fact, the use of LFR has been successfully challenged in British courts by civil liberties groups on the grounds that the technology can infringe on privacy and data protection laws (which, as I mentioned, the British government is trying to gut) and can be discriminatory.

Amnesty International puts it even more bluntly: AI-enabled remote biometric identification systems cannot co-exist with a codified system of human rights laws:

“There is no human rights compliant way to use remote biometric identification (RBI). No fixes, technical or otherwise, can make it compatible with human rights law. The only safeguard against RBI is an outright ban. If these systems are legalized, it will set an alarming and far-reaching precedent, leading to the proliferation of AI technologies that don’t comply with human rights in the future.”

Another common problem is that the internal workings of biometric surveillance tools, and how they collect, use, and store data, are often shrouded in secrecy, or at least opacity. They are also prone to biases and failure. This is particularly true of live facial recognition, as the BBC Science Focus article cautions:

Often the neural network trained to distinguish faces has been given biased data – typically as it is trained on more male white faces than other races and genders.

Researchers have shown that while accuracy of detecting white males is impressive, the biased training means that the AI is much less accurate when attempting to match female faces and the faces of people of colour.

Facewatch CEO Simon Gordon claims that the current accuracy of the company’s camera technology is 99.85%. As such, he says, misidentification is rare and when it happens, the implications are “minor.” But then he would say that; he has a product to sell.
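It is worth doing the back-of-the-envelope arithmetic on that figure. The rough sketch below makes two loud assumptions that the company's claim does not spell out: that "accuracy" means the share of individual scans handled correctly, and that every error is a false match against the watchlist. The scan volume is borrowed from Big Brother Watch's figure of half a million faces scanned by police in 2023, purely as an illustrative scale; it is not a Facewatch number, and the function name is hypothetical.

```python
def expected_false_alerts(scans, accuracy):
    """Expected number of wrong matches if each scan independently
    goes wrong with probability (1 - accuracy).

    Assumes all errors are false matches, which is a simplification.
    """
    return scans * (1.0 - accuracy)


scans = 500_000      # illustrative volume (assumption, not a Facewatch figure)
accuracy = 0.9985    # the 99.85% claimed by Facewatch's CEO

print(round(expected_false_alerts(scans, accuracy)))  # prints 750
```

On those assumptions, even a system that is right 99.85% of the time would wrongly flag hundreds of people for every half a million faces scanned, which is why a headline accuracy figure says little without knowing how many innocent faces pass in front of the cameras.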

Lastly, the systems pose another major problem (and I encourage readers to chime in with others): they are AI-operated. As such, many of the decisions or actions taken by retailers, corporations, banks, central banks and local, regional or national authorities that affect us will be fully automated; no human intervention will be needed. That means that trying to get those decisions or actions reversed or overturned is likely to be a Kafkaesque nightmare that even Kafka may have struggled to foresee.


Nov 6, 2024 22:00:02 GMT -5
